SmolDocling: 256M OCR Model Processes Documents in 0.35s on Consumer GPUs

IBM Research recently released SmolDocling, a 256M-parameter vision-language model built for full-document OCR and multimodal conversion. It reportedly processes a page in 0.35 seconds on consumer-grade GPUs. That sounds impressive, but how does it actually perform? Let’s look at its architecture, strengths, and limitations.

Parameters and Architecture: Compact yet Sophisticated Design

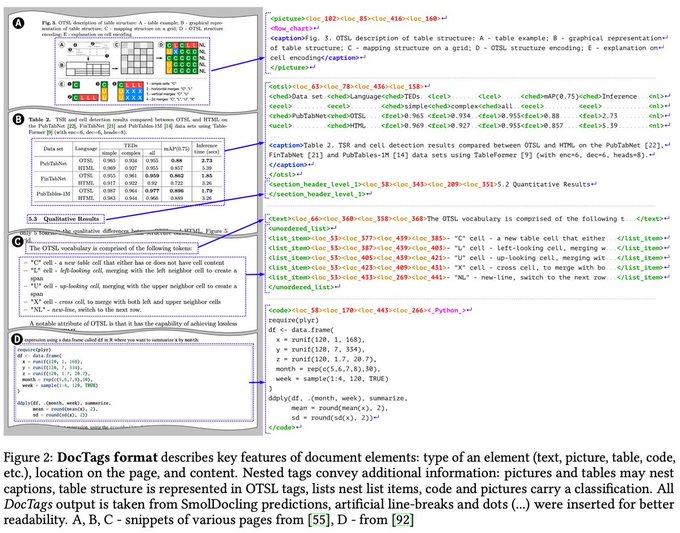

At its core, SmolDocling is a 256M-parameter vision-language model (VLM). Despite its small size, the design makes few compromises. According to the official release, it builds on SmolVLM and adds the document transcription capabilities of the Docling ecosystem. It outputs a new format called DocTags, which preserves the context and position of every page element. Here are the key details:

- Parameter Scale: At 256M parameters, it’s “pocket-sized” compared to models with tens of billions of parameters. VRAM requirements are correspondingly low: it reportedly runs in under 500MB of VRAM, even on older cards like the GTX 1060.

- Visual Encoder: Uses a lightweight SigLIP encoder (the 93M-parameter patch-16/512 version), which handles higher image resolutions than conventional VLMs. Following research from Apple and Google, the higher resolution improves detail capture, especially for fine elements like formulas and charts.

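- Language Backbone: A compact member of the SmolLM2 family (not the 1.7B variant, which by itself would exceed the 256M total; the 256M SmolVLM pairs the vision encoder with a SmolLM2 of roughly 135M parameters), with a reported 2048-token context window, sufficient for most document needs.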

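- Multimodal Fusion: Following the SmolVLM design, image features are projected into the language model’s embedding space and concatenated with the text tokens (rather than fused through separate cross-attention layers), and the model emits structured text. Training uses a single end-to-end objective, which streamlines the process.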

- Training Data: Includes 5.5M formulas (4.7M of them LaTeX formulas from arXiv), 9.3M code snippets in 56 languages, and 2.5M charts (bar charts, pie charts, and others), plus numerous public datasets. The data was cleaned and rendered carefully for quality assurance.
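To make the “patch-16/512” label on the visual encoder concrete, here is a minimal sketch of the arithmetic behind it, assuming it means a 512×512 input cut into 16×16-pixel patches. The function name `patch_grid` is hypothetical and not part of any SmolDocling or SigLIP API.

```python
def patch_grid(image_size: int = 512, patch_size: int = 16) -> tuple[int, int]:
    """Return (patches per side, total patches) for a square input.

    Assumes the encoder splits the image into non-overlapping square patches.
    """
    if image_size % patch_size != 0:
        raise ValueError("image_size must be a multiple of patch_size")
    per_side = image_size // patch_size
    return per_side, per_side * per_side


per_side, total = patch_grid()
print(f"{per_side} x {per_side} grid, {total} patches per 512x512 input")
```

Under that assumption, each 512×512 input yields a 32×32 grid, which is why a patch-16/512 encoder can resolve finer detail than one working at lower input resolutions.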

Advantages: Efficiency Meets Capability

Hardware-Friendly

The 256M total already includes the 93M visual encoder, so VRAM usage is remarkably low. The model runs on a regular laptop with minimal fan activity, which keeps it energy-efficient and quiet. Compared to 2B-parameter models like Qwen2-VL, SmolDocling is a true lightweight.
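A rough back-of-the-envelope sketch shows where the sub-500MB figure can come from. It counts model weights only, assumes 16-bit weights, and ignores activations, the KV cache, and framework overhead, so real usage will be higher. The helper `weight_memory_mib` is hypothetical.

```python
# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"float32": 4, "bfloat16": 2, "float16": 2, "int8": 1}


def weight_memory_mib(num_params: int, dtype: str = "bfloat16") -> float:
    """Estimate memory for the weights alone, in MiB."""
    return num_params * BYTES_PER_PARAM[dtype] / (1024 ** 2)


smol = weight_memory_mib(256_000_000)       # headline 256M-parameter figure
larger = weight_memory_mib(2_000_000_000)   # a 2B-parameter model for comparison

print(f"256M params @ bf16: {smol:.0f} MiB")
print(f"2B params   @ bf16: {larger:.0f} MiB")
```

At 16-bit precision the 256M weights come to roughly 488 MiB, against about 3.7 GiB for a 2B-parameter model, which is the gap the comparison above is pointing at.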

Fast Processing

The official figure of 0.35 seconds per page reportedly holds up in testing, with some variation depending on document complexity and hardware. A 10-page PDF takes only a few seconds, and the model handles complex documents such as scientific papers and contracts, including their footnotes, formulas, and tables.
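As a quick sanity check on what 0.35 seconds per page means in practice, the sketch below converts the per-page figure into batch estimates. It assumes a constant per-page latency and ignores model loading and PDF rasterization; both function names are hypothetical.

```python
def estimated_seconds(pages: int, seconds_per_page: float = 0.35) -> float:
    """Estimated conversion time, assuming constant per-page latency."""
    return pages * seconds_per_page


def pages_per_hour(seconds_per_page: float = 0.35) -> float:
    """Estimated sustained throughput at the given per-page latency."""
    return 3600 / seconds_per_page


print(f"10 pages:  {estimated_seconds(10):.1f} s")
print(f"300 pages: {estimated_seconds(300) / 60:.2f} min")
print(f"throughput: about {pages_per_hour():.0f} pages/hour")
```

Swapping in a measured `seconds_per_page` from your own hardware gives a more realistic estimate than the headline number.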

Robust Multimodal Capabilities

SmolDocling supports full parsing of text, layout, code, formulas, charts, and tables, plus image classification and caption matching. It can extract LaTeX formulas, table structures, and chart text from academic papers with accuracy reported to be comparable to that of larger models.

Open-Source Convenience

The model, datasets, and tools are fully open-source and compatible with Hugging Face’s transformers and vLLM, which makes SmolDocling developer-friendly and customizable.
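For readers who want to try the transformers route, here is a minimal sketch of a single-page conversion. The checkpoint name `ds4sd/SmolDocling-256M-preview` and the prompt text are assumptions based on the public Hugging Face release and should be checked against the model card; `build_messages` and `convert_page` are hypothetical helpers, and `torch`, `transformers`, and `Pillow` must be installed.

```python
MODEL_ID = "ds4sd/SmolDocling-256M-preview"  # assumed checkpoint name; verify on the Hub
PROMPT = "Convert this page to docling."     # assumed instruction; verify on the model card


def build_messages(prompt: str = PROMPT) -> list:
    """Chat-style input: one user turn holding an image slot and the instruction."""
    return [{"role": "user",
             "content": [{"type": "image"}, {"type": "text", "text": prompt}]}]


def convert_page(image_path: str, max_new_tokens: int = 4096) -> str:
    """Run one page image through the model and return the raw generated markup."""
    # Heavy imports live here so the helpers above work without a GPU stack.
    import torch
    from PIL import Image
    from transformers import AutoModelForVision2Seq, AutoProcessor

    device = "cuda" if torch.cuda.is_available() else "cpu"
    processor = AutoProcessor.from_pretrained(MODEL_ID)
    model = AutoModelForVision2Seq.from_pretrained(
        MODEL_ID, torch_dtype=torch.bfloat16
    ).to(device)

    image = Image.open(image_path).convert("RGB")
    chat = processor.apply_chat_template(build_messages(), add_generation_prompt=True)
    inputs = processor(text=chat, images=[image], return_tensors="pt").to(device)

    generated = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Drop the prompt tokens so only the newly generated markup is decoded.
    new_tokens = generated[:, inputs["input_ids"].shape[1]:]
    return processor.batch_decode(new_tokens, skip_special_tokens=False)[0].strip()


if __name__ == "__main__":
    print(convert_page("page.png"))  # "page.png" is a placeholder path
```

Special tokens are kept during decoding because the structural tags are part of the output; converting that markup to Markdown or HTML is typically left to the Docling tooling.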

Limitations: Small Model Constraints

Complex Scenario Challenges

The model struggles with high-resolution scans and handwritten documents, sometimes producing garbled output, and it is less stable than commercial OCR solutions.

Limited Expertise

Fewer parameters mean less stored knowledge. The model struggles with specialized content such as chemical formulas and legal terminology, where its understanding is noticeably shallower.

Immature Ecosystem

The Docling ecosystem is still in its early stages, with limited documentation and tutorials, which makes parameter tuning challenging for newcomers.

Conclusion: Promising but Not Perfect

SmolDocling packs a lot of capability into very little compute, approaching the document-conversion performance of much larger models with just 256M parameters. It’s fast, hardware-efficient, and solid in its multimodal capabilities, which makes it a good fit for budget-conscious users who want to save time. However, it’s not a universal solution and still needs refinement for complex scenarios and specialized domains. It’s worth trying on Hugging Face for the value it offers. What do you think about its potential? Share your thoughts in the comments!

Related Links

Article Source

SmolDocling: Consumer GPU Ready, RAG Tool, Smallest OCR Champion Goes Open Source!
