Alibaba's QwQ-32B: A 32B-Parameter Model Rivaling DeepSeek R1's 671B, Reshaping the Open-source AI Landscape?

In the large language model arena, the open-source community never lacks excitement and surprises. Today, Alibaba Cloud’s Tongyi Qianwen team dropped a bombshell: QwQ-32B, a reasoning model with only 32B parameters that claims performance comparable to DeepSeek R1 (671B parameters). This is not just a hardcore technical showdown but a bold challenge to the efficiency limits of AI models.

Small Size, Big Power: How Can 32B Parameters Match 671B?

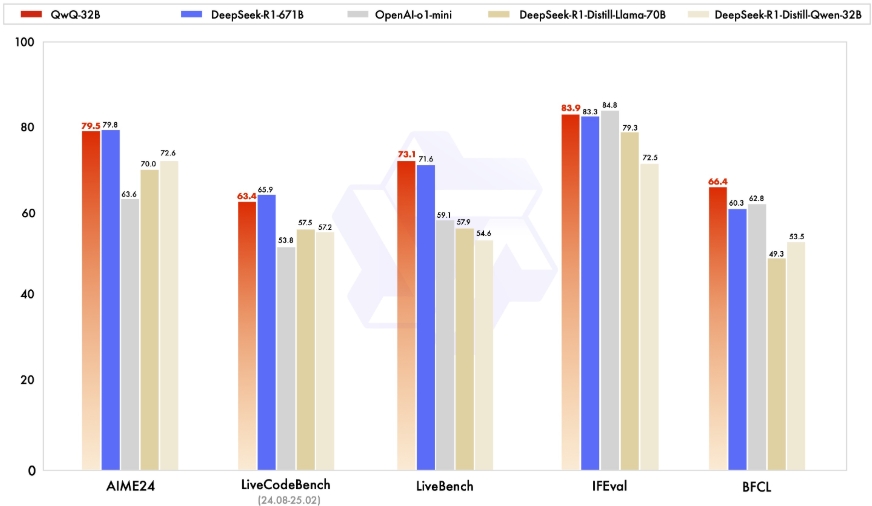

If DeepSeek R1 is the “giant” among reasoning models, dominating mathematics, programming, and logical reasoning with its 671B total parameters (37B activated per token), then QwQ-32B is a “small but beautiful” outlier. According to Alibaba Cloud’s official data, QwQ-32B performs on par with DeepSeek R1 on the AIME24 mathematics evaluation and the LiveCodeBench programming tests, and slightly outperforms it on the LiveBench and IFEval general capability tests. More impressively, it does so with roughly 1/21 of DeepSeek R1’s total parameter count.
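The size gap can be checked with quick arithmetic. The sketch below uses only the parameter counts quoted in this article; the one added assumption is that QwQ-32B is a dense model, so all of its parameters are used for every token, while DeepSeek R1 activates only part of its total.

```python
# Parameter counts in billions, as quoted in this article.
R1_TOTAL_B = 671   # DeepSeek R1, total parameters
R1_ACTIVE_B = 37   # DeepSeek R1, parameters activated per token
QWQ_B = 32         # QwQ-32B (assumed dense: all parameters used per token)


def ratio(a: float, b: float) -> float:
    """Return a / b rounded to two decimals."""
    return round(a / b, 2)


total_ratio = ratio(R1_TOTAL_B, QWQ_B)    # compares what must be stored
active_ratio = ratio(R1_ACTIVE_B, QWQ_B)  # compares what is used per token

print(f"total: {total_ratio}x, active per token: {active_ratio}x")
```

On total size the gap is about 21x, which is where the "1/21" figure comes from. On parameters used per token the two models are much closer, so the headline ratio mostly describes storage and deployment cost rather than per-token compute.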

Behind this achievement is Alibaba’s main lever: large-scale reinforcement learning (RL). Rather than relying only on the traditional pre-training plus supervised fine-tuning (SFT) routine, the team reports starting from a cold-start checkpoint and then scaling RL driven by outcome-based rewards, first on math and coding tasks and later on general capabilities. This RL-heavy recipe raised the ceiling for small models on reasoning tasks while remaining comparatively compute-efficient. The Tongyi Qianwen team even declared: “This is just our first step on the reinforcement learning path, with more surprises to come.”
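One common way to set up RL for reasoning is to reward only the final outcome, for example whether a math answer matches a known reference. The snippet below is a toy illustration of that idea, not Alibaba's training code: the `\boxed{...}` answer convention, the function names, and the exact-match rule are all assumptions made for this example.

```python
import re
from typing import Optional


def extract_final_answer(completion: str) -> Optional[str]:
    """Return the content of the last boxed answer in a completion, if any."""
    matches = re.findall(r"\\boxed\{([^{}]*)\}", completion)
    return matches[-1].strip() if matches else None


def outcome_reward(completion: str, reference: str) -> float:
    """Give 1.0 when the final answer equals the reference exactly, else 0.0."""
    answer = extract_final_answer(completion)
    return 1.0 if answer is not None and answer == reference.strip() else 0.0


# Three hypothetical completions for a problem whose reference answer is 42.
samples = [
    "Adding the terms gives \\boxed{42}",
    "I think the result is \\boxed{41}",
    "No final answer given.",
]
rewards = [outcome_reward(s, "42") for s in samples]
print(rewards)
```

In a real pipeline these scores would feed a policy-gradient style update, and the checker would need to be far more robust (equivalent forms of the same number, units, or test-case execution for code).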

Open-source Evolution: QwQ-32B’s Ambition and Trump Cards

Alibaba has maintained a rapid pace in the open-source AI field. From the Qwen series to Qwen2.5, and now QwQ-32B, the Tongyi Qianwen team has been challenging industry perceptions almost monthly. This time, QwQ-32B not only delivers hardcore performance but also continues Alibaba’s open-source tradition: it is freely available under the Apache 2.0 license, and developers can download and try it directly on Hugging Face and ModelScope.
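For readers who want to try the Hugging Face release, a minimal sketch with the `transformers` library might look like the following. It assumes the `Qwen/QwQ-32B` repository, an installed `transformers` plus `accelerate` (needed for `device_map="auto"`), and enough GPU memory for the weights; the helper names `build_messages` and `generate` are made up for this example.

```python
from typing import Dict, List

MODEL_ID = "Qwen/QwQ-32B"  # Hugging Face repository name


def build_messages(question: str) -> List[Dict[str, str]]:
    """Wrap a single user question in the chat-message format."""
    return [{"role": "user", "content": question}]


def generate(question: str, max_new_tokens: int = 512) -> str:
    """Download the model (on first call) and answer one question."""
    # Imported lazily so build_messages works without the heavy dependency.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_ID, torch_dtype="auto", device_map="auto"
    )
    prompt = tokenizer.apply_chat_template(
        build_messages(question), tokenize=False, add_generation_prompt=True
    )
    inputs = tokenizer([prompt], return_tensors="pt").to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Keep only the newly generated tokens, dropping the echoed prompt.
    new_tokens = output_ids[0][inputs.input_ids.shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

Calling `generate("How many r's are in the word strawberry?")` triggers the weight download, which is tens of gigabytes, so it is worth checking disk space and GPU memory first. Reasoning models tend to produce long outputs, so `max_new_tokens` may need to be raised well above this illustrative default.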

In contrast, while DeepSeek R1 is also an open-source benchmark, its 671B parameters impose very high hardware requirements: running it locally requires a top-tier GPU cluster. QwQ-32B is different; 32 billion parameters mean a much lower deployment barrier, and with quantization (for example, 4-bit weights) it can run on a single consumer-grade GPU such as the RTX 4090. This is undoubtedly a major benefit for small and medium-sized developers and research institutions.
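A back-of-the-envelope estimate shows why precision matters for the consumer-GPU claim. The sketch below counts weight memory only, as parameters times bytes per parameter; it ignores the KV cache, activations, and framework overhead, so real usage is higher. The 24 GB figure is the RTX 4090's VRAM, and the precision table is an assumption for illustration.

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}
RTX_4090_VRAM_GB = 24


def weight_memory_gb(params_billion: float, precision: str) -> float:
    """Approximate memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return params_billion * BYTES_PER_PARAM[precision]


for precision in BYTES_PER_PARAM:
    gb = weight_memory_gb(32, precision)
    fits = gb < RTX_4090_VRAM_GB
    print(f"{precision}: ~{gb:.0f} GB of weights, fits in 24 GB: {fits}")
```

At 16-bit precision the 32B weights alone need about 64 GB, far more than one 24 GB card; at 4-bit they drop to about 16 GB, which leaves some headroom for the cache. The same formula gives roughly 335 GB for a 671B model even at 4-bit, which is why that model needs a multi-GPU setup.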

More notably, QwQ-32B comes with built-in Agent capabilities, allowing it to use tools during reasoning and dynamically adjust its approach based on feedback. This “critical thinking” design makes it behave more like an “intelligent assistant” in complex scenarios rather than just an answer generator. Alibaba Cloud states this is just their initial attempt towards AGI (Artificial General Intelligence), with plans to combine stronger base models with RL to explore the limits of long-term reasoning.

Head-to-Head: QwQ-32B vs DeepSeek R1, Who’s the King of Reasoning?

Let the data speak. When releasing QwQ-32B, Alibaba Cloud showcased a report card: in the AIME24 math test, QwQ-32B matched DeepSeek R1, far surpassing OpenAI’s o1-mini; in LiveCodeBench programming tasks, the two scored neck-and-neck; in instruction following (IFEval) and tool calling (BFCL) tests, QwQ-32B even held a slight edge.

However, this doesn’t mean QwQ-32B has completely overshadowed DeepSeek R1. The latter, with its massive parameter count, still maintains advantages in long text processing and multilingual capabilities. QwQ-32B’s current limitation is its focus on mathematical and programming reasoning, with somewhat thinner general knowledge reserves. Nevertheless, considering the vast difference in parameter scale, QwQ-32B’s price-performance ratio is already impressive enough.

On X (formerly Twitter), users are actively discussing this “32B vs 671B” showdown. Some marvel: “Is Alibaba rewriting AI rules with reinforcement learning?” Others analyze more coolly: “DeepSeek R1’s size suits heavy-duty tasks; QwQ-32B’s lightweight approach is more practical.” Either way, this face-off has raised the intensity of open-source AI competition to a new level.

The Next Act in Open-source Ecosystem: Alibaba and DeepSeek’s “Dual Giants”

The emergence of QwQ-32B isn’t just Alibaba’s technical showcase but appears to be a shot in the arm for China’s AI open-source ecosystem. Over the past year, DeepSeek has frequently made headlines with its V3 and R1 series, gaining prominence among global developers for its low cost and strong performance. Now, Alibaba’s forceful entry with QwQ-32B shows they’re not content to let DeepSeek stand alone.

Their competition reflects two paths in open-source AI: DeepSeek takes the “big and complete” route, pursuing ultimate performance through MoE (Mixture of Experts) architecture and massive parameters; Alibaba leans towards “small but precise,” mining small model potential through reinforcement learning. This differentiated approach might offer developers more choices while accelerating industry innovation.

Final Thoughts: Is AI’s Future in Open Source?

From QwQ-32B to DeepSeek R1, Chinese AI companies’ rapid advance in the open-source track is drawing global attention eastward. At the launch event, the Alibaba Cloud team revealed their goal isn’t just to match existing benchmarks but to explore AGI boundaries through reinforcement learning and Agent technology. And QwQ-32B is just the starting line of this marathon.

For developers, this is undoubtedly a golden era. QwQ-32B’s lightweight design and open-source nature lower AI implementation barriers, while DeepSeek’s hardcore performance enables advanced applications. The next question is: who will have the last laugh in this “32B vs 671B” competition? Or rather, where will the next breakthrough in open-source AI emerge?
