LLM-Reasoner: Make Your Language Model Think Deeply Like DeepSeek R1

Introduction

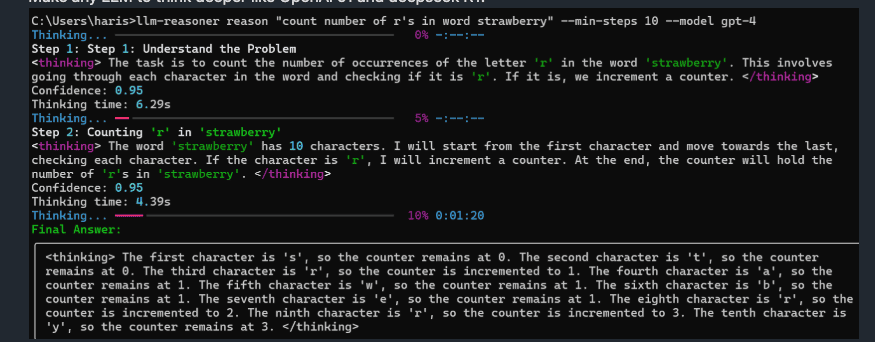

Large Language Models (LLMs) are becoming increasingly sophisticated, and models like DeepSeek R1 demonstrate advanced reasoning capabilities. If you want your own LLM to reason in a similar way, LLM-Reasoner may be the tool you need. It lets any LLM perform step-by-step reasoning and show how it reaches its conclusions, much like DeepSeek R1’s transparent approach.

What Makes LLM-Reasoner Unique

- Step-by-Step Reasoning: Unlike traditional LLMs that might directly provide answers without explaining the process, LLM-Reasoner breaks down complex problems into understandable reasoning steps, providing transparency in decision-making.

- Real-Time Progress Animation: Watch the reasoning process evolve in real time, which makes it both informative and engaging.

- Multi-Vendor Compatibility: Through integration with LiteLLM, LLM-Reasoner supports various LLM providers, enhancing flexibility.

- User-Friendly Interface: A Streamlit-based UI for easy interaction, plus a Command Line Interface (CLI) for users who prefer scripting.

- Confidence Tracking: Each reasoning step comes with a confidence score, letting users understand the model’s certainty.

Quick Start

1. Installation

pip install llm-reasoner

2. Configure API Keys

# OpenAI
export OPENAI_API_KEY="sk-your-key"

# Anthropic
export ANTHROPIC_API_KEY="your-key"

# Google Vertex
export VERTEX_PROJECT="your-project"

3. Basic Commands

# View available models
llm-reasoner models

# Perform reasoning on a query (--min-steps sets the minimum number of reasoning steps and ensures thorough reasoning)
llm-reasoner reason "How do airplanes fly?" --min-steps 5

# Launch interactive UI
llm-reasoner ui

Key Parameter Explanations:

- min-steps: Minimum number of reasoning steps. Range 1-10, default 3. Higher values mean more detailed reasoning.

- max-steps: Maximum number of reasoning steps. Range 2-20, default 8. Prevents overly lengthy reasoning.

- temperature: Controls output randomness. Range 0-2, default 0.7. Lower values mean more deterministic output.

- timeout: Reasoning timeout in seconds, default 30. Adjust based on problem complexity.
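The documented ranges can be checked before a run is started. The following is a minimal sketch, not part of LLM-Reasoner: `ReasonerSettings` is a hypothetical helper, and the rule that `min_steps` must not exceed `max_steps` is an assumption rather than something the tool is known to enforce.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReasonerSettings:
    """Hypothetical container mirroring the documented defaults (not a library class)."""

    min_steps: int = 3
    max_steps: int = 8
    temperature: float = 0.7
    timeout: float = 30.0

    def __post_init__(self) -> None:
        # Ranges below are the ones stated in the parameter list.
        if not 1 <= self.min_steps <= 10:
            raise ValueError("min_steps must be between 1 and 10")
        if not 2 <= self.max_steps <= 20:
            raise ValueError("max_steps must be between 2 and 20")
        if not 0.0 <= self.temperature <= 2.0:
            raise ValueError("temperature must be between 0 and 2")
        if self.timeout <= 0:
            raise ValueError("timeout must be positive")
        # Assumption: a minimum above the maximum is treated as a mistake.
        if self.min_steps > self.max_steps:
            raise ValueError("min_steps cannot exceed max_steps")


settings = ReasonerSettings(min_steps=5, temperature=0.2)
print(settings)
```

Validating up front keeps a typo such as `temperature=20` from turning into a confusing provider error later.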

SDK Usage Guide

Basic Example

import asyncio

from llm_reasoner import ReasonChain


async def main():
    # Create a reasoning chain instance and configure its core parameters
    chain = ReasonChain(
        model="gpt-4",    # Model to use, supports major LLMs
        min_steps=3,      # Minimum reasoning steps, ensures reasoning depth
        temperature=0.2,  # Temperature parameter, controls output randomness
        timeout=30.0,     # Reasoning timeout, in seconds
    )

    # Asynchronously generate reasoning steps with rich metadata
    async for step in chain.generate_with_metadata("Why is the sky blue?"):
        print(f"Step {step.number}: {step.title}")
        print(f"Thinking time: {step.thinking_time:.2f} seconds")  # Time per step
        print(f"Confidence score: {step.confidence:.2f}")  # Model's confidence in this step
        print(step.content)  # Reasoning content


asyncio.run(main())

The returned step object contains these important attributes:

- number: Current reasoning step number

- title: Title summary of this step

- thinking_time: Thinking time for this step

- confidence: Model’s confidence level in this step’s conclusion (0-1)

- content: Detailed reasoning content
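Display code that consumes these attributes can be exercised offline, without an API key. The sketch below assumes nothing about the library beyond the five attribute names listed above: `Step` and `fake_steps` are hypothetical stand-ins for the real step object and for `chain.generate_with_metadata(...)`, and the sample values are made up for illustration.

```python
import asyncio
from dataclasses import dataclass
from typing import AsyncIterator


@dataclass
class Step:
    """Stand-in with the five listed attributes (not the library's own class)."""

    number: int
    title: str
    thinking_time: float
    confidence: float
    content: str


async def fake_steps() -> AsyncIterator[Step]:
    """Hypothetical replacement for chain.generate_with_metadata(...)."""
    yield Step(1, "Sunlight composition", 0.41, 0.95, "Sunlight contains all visible wavelengths.")
    yield Step(2, "Rayleigh scattering", 0.63, 0.90, "Shorter wavelengths scatter more strongly.")


def format_step(step: Step) -> str:
    """Render one step as a single summary line."""
    return f"Step {step.number}: {step.title} ({step.confidence:.0%} confident, {step.thinking_time:.2f}s)"


async def collect(steps: AsyncIterator[Step]) -> list[str]:
    """Drain an async stream of steps into formatted lines."""
    return [format_step(step) async for step in steps]


lines = asyncio.run(collect(fake_steps()))
print("\n".join(lines))
```

Because `collect` only relies on async iteration and attribute access, the same function should work on the real generator, provided its step objects expose these attributes as described.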

Advanced Features

Metadata Tracking

Each reasoning step includes metadata like confidence levels and processing times, allowing for deeper analysis.
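One possible form of that analysis is to aggregate confidence and timing across a finished chain. This is a minimal sketch under stated assumptions: `StepRecord` is a hypothetical record holding values copied from each step, and the 0.7 threshold for flagging a step is an arbitrary illustrative choice.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class StepRecord:
    """Hypothetical record of the metadata copied from one reasoning step."""

    number: int
    confidence: float
    thinking_time: float


def summarize(steps: list[StepRecord], threshold: float = 0.7) -> dict:
    """Aggregate confidence and timing, and flag steps below the threshold."""
    if not steps:
        raise ValueError("at least one step is required")
    return {
        "mean_confidence": mean(s.confidence for s in steps),
        "total_thinking_time": sum(s.thinking_time for s in steps),
        "low_confidence_steps": [s.number for s in steps if s.confidence < threshold],
    }


records = [
    StepRecord(number=1, confidence=0.9, thinking_time=0.5),
    StepRecord(number=2, confidence=0.6, thinking_time=1.5),
    StepRecord(number=3, confidence=0.9, thinking_time=1.0),
]
summary = summarize(records)
print(summary["low_confidence_steps"])
```

Flagged step numbers give a quick pointer to the parts of a chain worth re-reading or re-running.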

Custom Model Registration

# Python API
from llm_reasoner import model_registry

model_registry.register_model(name="my_model", provider="my_provider", context_window=8192)

# CLI method
llm-reasoner register-model my_model azure --context-window 16384

Reasoning Chain Customization

from llm_reasoner import ReasonChain

chain = ReasonChain(
    model="claude-2",  # Choose the model to use
    max_tokens=750,    # Maximum tokens per reasoning step, affects output length
    temperature=0.2,   # Temperature parameter: 0-2, lower means more deterministic
    timeout=30.0,      # Reasoning timeout: adjust based on problem complexity
    min_steps=5,       # Minimum reasoning steps: ensures thorough reasoning
)

chain.clear_history()  # Clear history, start new reasoning session

Core Parameter Explanations:

- model: Model name to use. Make sure the corresponding API key is configured.

- max_tokens: Maximum output length per reasoning step. Recommended 300-1000.

- temperature: Lower values mean more stable, predictable output; higher values mean more creative output.

- timeout: In seconds. Increase it for complex problems.

- min_steps: Forces more detailed reasoning, but increases API call costs.
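The cost effect of raising the step count can be estimated with simple arithmetic. The sketch below is a rough ceiling, assuming each reasoning step produces at most `max_tokens` output tokens as described above. It ignores prompt tokens, which also grow with each step, and `output_token_ceiling` is a hypothetical helper, not a library function.

```python
def output_token_ceiling(steps: int, max_tokens: int) -> int:
    """Upper bound on output tokens if every step uses its full max_tokens allowance."""
    if steps < 1 or max_tokens < 1:
        raise ValueError("steps and max_tokens must be positive")
    return steps * max_tokens


# Compare the default of 3 steps with the customized chain's 5 steps at 750 tokens each.
baseline = output_token_ceiling(steps=3, max_tokens=750)
thorough = output_token_ceiling(steps=5, max_tokens=750)
print(baseline, thorough)
```

Actual usage is usually lower, since steps rarely fill their whole allowance, but the ceiling is a convenient number to budget against.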

Model Support

LLM-Reasoner supports models from these providers:

- OpenAI

- Anthropic

- Azure

- Custom models

UI Usage Guide

- Launch the UI:

llm-reasoner ui

- In the interface:

  - Select the appropriate model

  - Adjust the relevant settings

  - Enter your query

  - Watch the reasoning process unfold in real time

Important Notes

- The project relies on litellm to communicate with LLM providers, so refer to the official litellm documentation for the specific models and providers it supports

- When debugging locally, remember to set the corresponding environment variables for your LLM provider
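A quick check for missing variables can save a failed run. This is a minimal sketch: the variable names come from the Quick Start section, while the provider labels and the `missing_env` helper are hypothetical. Other providers may need different or additional variables, so the litellm documentation remains the reference.

```python
import os
from typing import Mapping

# Variable names as shown in Quick Start; the provider labels are illustrative.
REQUIRED_ENV = {
    "openai": ["OPENAI_API_KEY"],
    "anthropic": ["ANTHROPIC_API_KEY"],
    "vertex": ["VERTEX_PROJECT"],
}


def missing_env(provider: str, environ: Mapping[str, str] = os.environ) -> list[str]:
    """Return the variables for a provider that are unset or empty.

    Providers not listed in REQUIRED_ENV yield an empty list, because this
    sketch has no information about what they need.
    """
    return [name for name in REQUIRED_ENV.get(provider, []) if not environ.get(name)]


missing = missing_env("openai")
if missing:
    print("Set these before running llm-reasoner:", ", ".join(missing))
```

Passing a plain dictionary as `environ` makes the check easy to test without touching the real environment.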
