DeepSeek V3.1: Hybrid Reasoning, Strong Coding & Agent Power, Claude Code Support

DeepSeek V3.1 was quietly released on August 21, 2025 as an iteration of the V3 family. It significantly improves programming, reasoning efficiency, and Agent capabilities, marking an important step toward the Agent era. With excellent performance and highly competitive cost, it has gained strong attention across the open-source AI community.

Key Highlights

- Hybrid Reasoning Architecture: A single model supports both “Think” and “Non‑Think” modes. Users can toggle via the “Deep Thinking” control to balance deep reasoning with quick responses—use deep thinking for complex problems and fast mode for simple tasks to avoid unnecessary compute.

- Excellent Programming Performance: DeepSeek V3.1 performs strongly on multiple coding benchmarks. On the Aider benchmark, it reaches a 71.6% pass rate, surpassing top proprietary models like Claude Opus. Notably, the reported total cost to reach this level is around $1—roughly 68× cheaper than comparable Claude Opus performance.

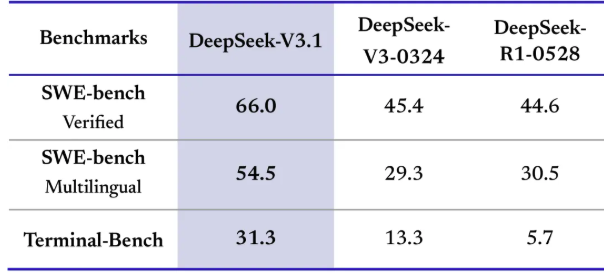

- Stronger Agent Abilities: Post-training optimizations substantially improve tool use and agentic tasks (e.g., code repair and search). On SWE-bench (code repair) and Terminal-Bench (complex CLI tasks), V3.1 shows clear gains over prior DeepSeek models.

- Value & Open Source: With 685B parameters and a 128K context window, V3.1 uses a Mixture‑of‑Experts design that activates ~37B parameters per token. This keeps inference costs low while sustaining high performance. The base models are open‑sourced on Hugging Face.

- Anthropic API Compatibility: Newly supports Anthropic API request/response formats, making it easy to plug DeepSeek V3.1 into the Claude Code framework and its ecosystem, reducing migration and integration costs. See the integration guide.
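As a rough illustration of the Anthropic API compatibility described above, the sketch below builds the environment variables that would point Claude Code at DeepSeek. The base URL and the `ANTHROPIC_*` variable names are assumptions that are not stated in this article, and `claude_code_env` and `as_shell_exports` are hypothetical helpers. Check each value against the official integration guide before relying on it.

```python
import shlex

# Assumption: Anthropic-compatible endpoint; confirm in the integration guide.
DEEPSEEK_ANTHROPIC_BASE_URL = "https://api.deepseek.com/anthropic"


def claude_code_env(api_key: str, model: str = "deepseek-chat") -> dict:
    """Return environment variables (assumed names) for Claude Code."""
    if not api_key:
        raise ValueError("api_key must be a non-empty string")
    return {
        "ANTHROPIC_BASE_URL": DEEPSEEK_ANTHROPIC_BASE_URL,
        "ANTHROPIC_AUTH_TOKEN": api_key,
        "ANTHROPIC_MODEL": model,
    }


def as_shell_exports(env: dict) -> str:
    """Render the variables as shell `export` lines, quoting where needed."""
    return "\n".join(f"export {key}={shlex.quote(value)}" for key, value in env.items())
```

Printing `as_shell_exports(claude_code_env(your_key))` gives lines that can be pasted into a shell profile before launching Claude Code. Passing `model="deepseek-reasoner"` would select thinking mode instead, assuming the compatible endpoint accepts that name.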

Performance & Benchmarks

DeepSeek V3.1 demonstrates strong capabilities across benchmarks:

- Programming & Code: Scores 71.6% on the multi-language Aider benchmark, ahead of Claude 4 Opus. Real-world trials show it handles complex coding tasks well (e.g., a 3D particle galaxy built with Three.js), though the visual polish of generated UIs still has room to improve.

- Reasoning: In a basic reasoning probe, the model failed to distinguish “the dead” from “the living,” yet it performs reasonably on logic and word‑count tasks. According to the team, after chain‑of‑thought compression, V3.1‑Think maintains average parity with R1‑0528 while reducing output tokens by 20%–50% and responding faster.

- Multi-step Reasoning & Search: The team reports major gains over R1-0528 on BrowseComp (complex search) and HLE (Humanity's Last Exam, a set of expert-level multidisciplinary problems).

Comparison with Other Models

Compared with leading models like GPT‑4o and Claude Opus, DeepSeek V3.1 is highly competitive—especially on coding and cost‑efficiency. Some evaluations note GPT‑4o may still lead on certain general tasks and advanced math, but V3.1 can contend in programming and reasoning workloads. While programming is a standout, some users find creative writing quality still has room to grow.

How to Use

You can access DeepSeek V3.1 via multiple channels:

- Web & App: Official clients have upgraded to V3.1, including the “Deep Thinking” feature.

- API: Use `deepseek-chat` for non-thinking mode and `deepseek-reasoner` for thinking mode. Both now support a 128K context window. Anthropic API compatibility enables plug-and-play integration with the Claude Code framework, minimizing adaptation effort.

- Open Source: Base models are available on Hugging Face for researchers and developers.
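To make the mode selection concrete, here is a minimal sketch that maps a thinking flag to the two model names mentioned above and builds a chat request body. The endpoint URL, the OpenAI-style payload shape, and the bearer-token header are assumptions not confirmed by this article. `build_chat_request` and `send_chat_request` are hypothetical helper names.

```python
import json
import urllib.request

# Assumption: OpenAI-style chat completions endpoint; verify in the API docs.
API_URL = "https://api.deepseek.com/chat/completions"

# Model names as given in the article: one per reasoning mode.
MODEL_BY_MODE = {False: "deepseek-chat", True: "deepseek-reasoner"}


def build_chat_request(prompt: str, thinking: bool = False) -> dict:
    """Build a request body, choosing the model from the thinking flag."""
    return {
        "model": MODEL_BY_MODE[thinking],
        "messages": [{"role": "user", "content": prompt}],
    }


def send_chat_request(payload: dict, api_key: str) -> dict:
    """POST the payload and return the parsed JSON response (needs network)."""
    request = urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",  # assumed auth scheme
            "Content-Type": "application/json",
        },
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)
```

A quick task might use `build_chat_request("Rename this variable")`, while a harder one would pass `thinking=True` to route to `deepseek-reasoner`. This mirrors the "Deep Thinking" toggle in the official clients.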

In short, DeepSeek V3.1 delivers major advances in programming and agentic abilities. Its hybrid reasoning design and outstanding cost‑performance offer a compelling option for enterprises and developers, while further energizing the open‑source community.
