Qwen-TTS by Alibaba Cloud: Multi-Dialect TTS Model & Fast API for Natural Speech Synthesis


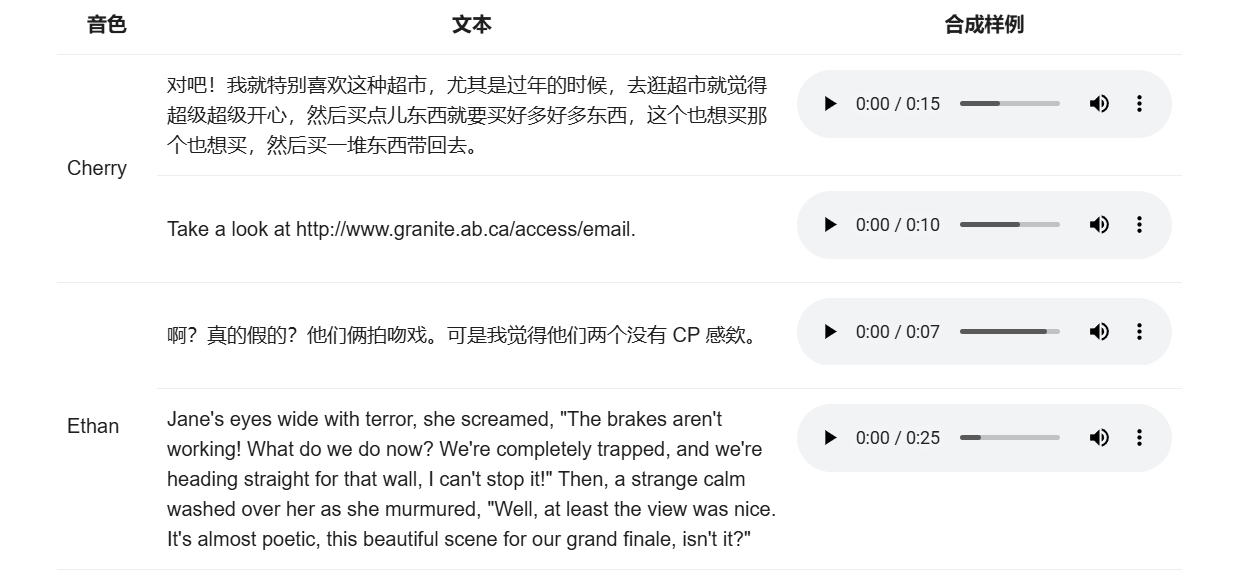

On June 27, 2025, the Alibaba Cloud Qwen team released Qwen-TTS (qwen-tts-2025-05-22), a text-to-speech (TTS) model served through the Qwen API. It offers ultra-natural speech, support for several Chinese dialects, and strong emotional expressiveness.

Key Highlights

- Ultra-natural Speech: Trained on millions of hours of audio, Qwen-TTS delivers human-like naturalness and emotional expressiveness, with intelligent adaptation of intonation and rhythm.

- Multi-dialect Support: Covers three Chinese dialects, each with its own voice: Beijing (Dylan), Shanghai (Jada), and Sichuan (Sunny). It also offers seven bilingual (Chinese/English) voices, all vivid and authentic.

- Developer-friendly: Simple API calls and quick integration make it suitable for a wide range of scenarios.
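Each dialect is selected by passing the matching voice name as the `voice` parameter of the API call (the sample code below uses `voice="Dylan"` for Beijing dialect). As a minimal sketch, a small lookup table keeps that mapping in one place. The `DIALECT_VOICES` dictionary and `voice_for_dialect` helper are hypothetical conveniences, not part of the DashScope SDK; only the voice names come from the list above.

```python
# Hypothetical helper (not part of the DashScope SDK): map a Chinese dialect
# to the Qwen-TTS voice that speaks it. Voice names are taken from the article.
DIALECT_VOICES = {
    "beijing": "Dylan",
    "shanghai": "Jada",
    "sichuan": "Sunny",
}


def voice_for_dialect(dialect: str) -> str:
    """Return the voice name for a dialect, ignoring case and surrounding spaces."""
    key = dialect.strip().lower()
    if key not in DIALECT_VOICES:
        supported = ", ".join(sorted(DIALECT_VOICES))
        raise ValueError(f"Unsupported dialect {dialect!r}; expected one of: {supported}")
    return DIALECT_VOICES[key]


if __name__ == "__main__":
    print(voice_for_dialect("Sichuan"))  # → Sunny
```

The result can be passed straight through, e.g. `voice=voice_for_dialect("Shanghai")`. Raising on an unknown dialect surfaces typos locally instead of as an API error.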

Sample Code:

```python
import os

import dashscope

# Set the API key (from an environment variable or assigned directly)
dashscope.api_key = os.getenv("DASHSCOPE_API_KEY")  # Make sure DASHSCOPE_API_KEY is set in your environment

# Or assign it directly (not recommended for production)
# dashscope.api_key = "your-api-key-here"

# Generate Beijing-dialect speech with Qwen-TTS
text = "Hey, Curry shoots like it's a game, the hoop is calling him dad!"

response = dashscope.audio.qwen_tts.SpeechSynthesizer.call(
    model="qwen-tts-latest",
    text=text,
    voice="Dylan",  # Beijing dialect voice
)

# Check the response and get the audio URL
if response.status_code == 200:
    audio_url = response.output.audio["url"]
    print(f"Audio URL: {audio_url}")
else:
    print(f"Error: {response.message}")
```

API Key Setup Instructions:

- Environment Variable (Recommended): Set `DASHSCOPE_API_KEY` in your system environment variables. Use `export DASHSCOPE_API_KEY=your-api-key` on Linux/macOS, or set it in the Windows environment variables.

- Direct Assignment in Code (For Testing): Assign the API key from the Alibaba Cloud DashScope platform directly to `dashscope.api_key` (requires registering an account and applying for a key).

- Get your API key: Visit https://dashscope.aliyun.com, log in, and generate a key in the console.
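The sample code only prints the audio URL. A minimal sketch of two follow-up steps, using only the Python standard library: `require_api_key` fails fast with a clear message when `DASHSCOPE_API_KEY` is unset or empty, and `download_audio` saves the file behind the returned URL to disk. Both function names are hypothetical, and the sketch assumes the URL in `response.output.audio["url"]` can be fetched with a plain GET request (no extra authentication headers) before it expires; it does not handle retries.

```python
import os
import shutil
import urllib.request
from pathlib import Path


def require_api_key(var_name: str = "DASHSCOPE_API_KEY") -> str:
    """Return the API key from the environment, or raise if it is missing or blank."""
    key = os.environ.get(var_name, "").strip()
    if not key:
        raise RuntimeError(
            f"{var_name} is not set; run `export {var_name}=your-api-key` first"
        )
    return key


def download_audio(url: str, dest: str, timeout: float = 30.0) -> Path:
    """Fetch the resource at `url`, write it to `dest`, and return the written path.

    Assumption: the URL is directly fetchable and has not expired yet.
    """
    path = Path(dest)
    path.parent.mkdir(parents=True, exist_ok=True)  # create the target folder if needed
    with urllib.request.urlopen(url, timeout=timeout) as resp, open(path, "wb") as out:
        shutil.copyfileobj(resp, out)  # copy in chunks rather than reading it all at once
    return path
```

In the sample above, `dashscope.api_key = require_api_key()` could replace the `os.getenv` line, and `download_audio(audio_url, "your-file-name")` could follow the `print` call; pick a file extension that matches the returned audio format.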

Application Scenarios

- Education & Culture: Supports dialect digitization and language learning.

- Entertainment Creation: Adds regional flavor to dubbing for short videos and games.

- Smart Interaction: Enhances customer service and accessibility, improving user experience.

- Cross-language Support: Chinese-English bilingual voices meet global needs.
