Meta Launches Llama 3.3: 70B Parameters Rival 405B, with 128K Context Support

Meta has released Llama 3.3, the latest model in its open-weight Llama family. The 70B-parameter model targets performance close to that of the much larger Llama 3.1 405B, with multilingual support and a 128K-token context window.

Key Highlights

Compared to its predecessors, Llama 3.3 shows significant improvements in:

- Superior Performance: Approaches the performance of Llama 3.1 405B with only 70B parameters

- Extended Context: Supports a 128K-token context window, which helps with long-document processing

- Multilingual Support: Native support for 8 languages: English, German, French, Italian, Portuguese, Hindi, Spanish, and Thai

- Efficient Architecture: Optimized Transformer architecture with Grouped-Query Attention (GQA)
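To make the link between GQA and the 128K context window concrete, here is a rough back-of-the-envelope sketch of key/value cache size for a single full-length sequence. The layer count, head counts, and head dimension are assumptions taken from the published Llama 3 70B configuration rather than from this article, and the 16-bit cache precision is also an assumption.

```python
# Architecture values are assumptions based on the published Llama 3 70B
# configuration; they are not stated in this article.
NUM_LAYERS = 80              # transformer blocks
NUM_QUERY_HEADS = 64         # query heads (what plain multi-head attention would cache)
NUM_KV_HEADS = 8             # key/value heads shared across query groups under GQA
HEAD_DIM = 128               # dimension per head
BYTES_PER_VALUE = 2          # assumed 16-bit (bfloat16) cache entries
CONTEXT_TOKENS = 128 * 1024  # 128K-token window


def kv_cache_bytes(num_kv_heads: int, tokens: int) -> int:
    """Bytes needed to cache keys and values for one sequence."""
    # Factor of 2 covers one key tensor and one value tensor per layer.
    per_token = 2 * NUM_LAYERS * num_kv_heads * HEAD_DIM * BYTES_PER_VALUE
    return per_token * tokens


gqa_bytes = kv_cache_bytes(NUM_KV_HEADS, CONTEXT_TOKENS)
mha_bytes = kv_cache_bytes(NUM_QUERY_HEADS, CONTEXT_TOKENS)

GIB = 1024 ** 3
print(f"GQA cache: {gqa_bytes / GIB:.0f} GiB")   # 40 GiB
print(f"MHA cache: {mha_bytes / GIB:.0f} GiB")   # 320 GiB
print(f"Reduction: {mha_bytes // gqa_bytes}x")   # 8x
```

Under these assumptions, sharing 8 key/value heads across 64 query heads cuts the cache for a full 128K-token sequence by a factor of 8, which is a large part of what makes long contexts practical to serve.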

Technical Specifications

Key technical parameters of Llama 3.3:

- Pre-training Data: 15T+ tokens from public online datasets

- Knowledge Cutoff: December 2023

- I/O Modalities: Multilingual text input, text and code output

- Training Method: Instruction tuning with supervised fine-tuning (SFT) and reinforcement learning with human feedback (RLHF)

- Deployment Options: Supports 4-bit and 8-bit quantization
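As a rough illustration of why the quantization options matter for deployment, the sketch below estimates weight storage for a 70B-parameter model at different precisions. It is a simplification: it counts weights only, assumes a 16-bit baseline, and ignores activations, the key/value cache, and the per-block metadata that real quantization schemes add.

```python
PARAMS = 70e9  # 70B parameters, as stated in the article


def weight_memory_gb(params: float, bits_per_weight: int) -> float:
    """Approximate weight storage in decimal gigabytes (1 GB = 1e9 bytes)."""
    return params * bits_per_weight / 8 / 1e9


# 16-bit is an assumed unquantized baseline; 8-bit and 4-bit are the
# quantized options listed above.
for bits in (16, 8, 4):
    print(f"{bits:>2}-bit weights: ~{weight_memory_gb(PARAMS, bits):.0f} GB")
```

This prints roughly 140 GB, 70 GB, and 35 GB, so 4-bit quantization brings the weights within reach of a single high-memory accelerator, at some cost in output quality.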

Performance Metrics

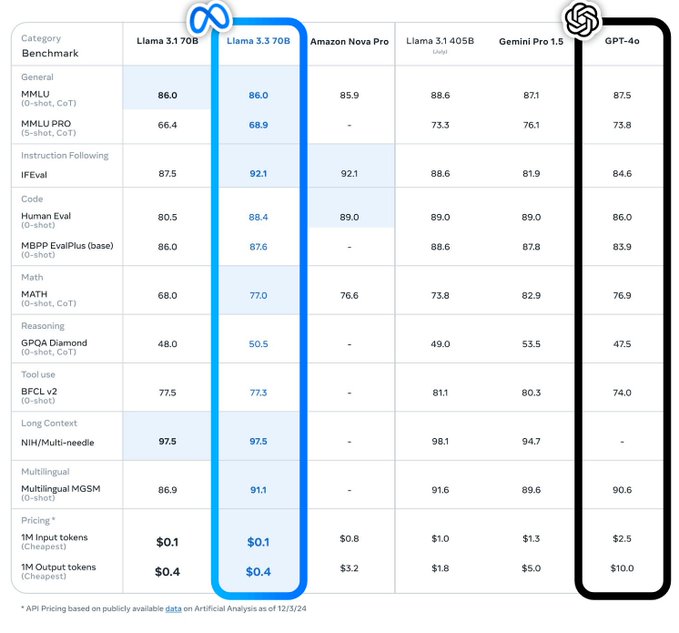

Compared with Llama 3.1 70B Instruct, Llama 3.3 improves on several key benchmarks:

- MMLU (CoT): 86.0, unchanged from Llama 3.1 70B (88.6 is the Llama 3.1 405B score)

- HumanEval: Increased from 80.5% to 88.4%

- MATH (CoT): Improved from 68.0 to 77.0

- MGSM: Increased from 86.9% to 91.1%

Open Source Strategy

Meta adopts a responsible open-source approach:

- Complete model weights and code access

- Release under the Llama 3.3 Community License Agreement

- Commercial and research use support

- Built-in safety mechanisms

Usage Restrictions

Important considerations for using Llama 3.3:

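- Agreement to Meta’s privacy policy is required

- Use must comply with the community guidelines

- A separate license from Meta is required for commercial use at very large scale (more than 700 million monthly active users)

- Use for illegal purposes is prohibited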

Meta states that Llama 3.3’s release will accelerate AI democratization, providing developers and researchers worldwide with a more powerful open-source option.

Model Access

The model is available for download on Hugging Face: meta-llama/Llama-3.3-70B-Instruct

Note: Downloading and using the model requires agreement to Meta’s terms of service and privacy policy.
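Once access has been granted, loading the model could look like the minimal sketch below, which uses the Hugging Face `transformers` text-generation pipeline. The helper names `build_messages` and `generate`, the default system prompt, and the generation settings are illustrative assumptions, not part of Meta's release.

```python
MODEL_ID = "meta-llama/Llama-3.3-70B-Instruct"


def build_messages(user_prompt: str,
                   system_prompt: str = "You are a helpful assistant.") -> list[dict]:
    """Build a chat-format message list (hypothetical helper)."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_prompt},
    ]


def generate(user_prompt: str, max_new_tokens: int = 256) -> str:
    """Run one chat turn; the weights are downloaded on first use."""
    # Imported lazily so build_messages works without the heavy dependencies.
    import torch
    import transformers

    pipe = transformers.pipeline(
        "text-generation",
        model=MODEL_ID,
        model_kwargs={"torch_dtype": torch.bfloat16},
        device_map="auto",  # spread layers across the available devices
    )
    outputs = pipe(build_messages(user_prompt), max_new_tokens=max_new_tokens)
    # With chat input, generated_text holds the whole conversation;
    # the last entry is the assistant's reply.
    return outputs[0]["generated_text"][-1]["content"]
```

Calling `generate("Summarize the Llama 3.3 release in one sentence.")` would trigger the download, so it needs an authenticated Hugging Face session (for example via `huggingface-cli login`) on an account that has accepted the license, plus enough accelerator memory for the chosen precision.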
