Hallo3: An Open-Source High-Dynamic Realistic Portrait Animation Model

Project Overview

Hallo3 (Highly Dynamic and Realistic Portrait Image Animation with Diffusion Transformer Networks) is a portrait image animation model developed by the Fudan Generative Vision Lab. Built on diffusion transformer networks, it takes a static portrait photo and a driving audio clip and generates a highly dynamic, realistic talking head video, which makes it a practical foundation for digital avatar creation.

The model can be widely applied in various scenarios:

- Digital Avatar Creation: Quickly generate talking digital avatars from a single photo and audio input, suitable for virtual hosts and digital spokespersons

- Education and Training: Transform static teaching materials into engaging video content, enhancing online education interactivity

- Content Creation: Help creators produce talking head videos more efficiently

- Marketing Presentations: Provide personalized digital avatar solutions for brand and product presentations

Key Features

- High Dynamicity: The model generates highly dynamic and naturally fluid facial movements and expressions.

- Realism: The generated portrait animations feature high realism and detailed expression.

- Open Source: The project is fully open-source, available for researchers and developers to use and study.

Technical Implementation

Hallo3 is implemented based on the following key technologies:

- Diffusion Transformer Networks architecture

- Advanced animation generation strategies

- High-quality portrait image animation support

System Requirements

- OS: Ubuntu 20.04/Ubuntu 22.04

- CUDA Version: 12.1

- Tested GPU: H100

Pretrained Model Download

You can obtain the required pretrained models through:

- Using huggingface-cli:

cd $ProjectRootDir

pip install -U "huggingface_hub[cli]"

huggingface-cli download fudan-generative-ai/hallo3 --local-dir ./pretrained_models

- Or manually download from these sources:

- hallo3: Main project checkpoints

- CogVideoX: CogVideoX-5b-I2V pretrained model, including the transformer and 3D VAE

- t5-v1_1-xxl: Text encoder

- audio_separator: Kim_Vocal_2 MDX-Net vocal separation model

- wav2vec: Facebook’s audio-to-vector model

- insightface: 2D and 3D face analysis models

- face landmarker: Face detection and mesh model from mediapipe
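After downloading, it can help to confirm that the components listed above actually landed in ./pretrained_models. The following is a minimal sketch, not part of the project: the directory names in `EXPECTED_COMPONENTS` are assumptions derived from the list above, and the real checkpoint layout may differ, so adjust them to match what you see on disk.

```python
from pathlib import Path

# Hypothetical directory names, taken from the component list above.
# The actual layout of the downloaded checkpoints may differ.
EXPECTED_COMPONENTS = [
    "hallo3",
    "t5-v1_1-xxl",
    "audio_separator",
    "wav2vec",
]


def missing_components(root, expected=EXPECTED_COMPONENTS):
    """Return the names in `expected` that are not subdirectories of `root`."""
    root = Path(root)
    return [name for name in expected if not (root / name).is_dir()]


if __name__ == "__main__":
    missing = missing_components("./pretrained_models")
    if missing:
        print("Missing components:", ", ".join(missing))
    else:
        print("All expected components found.")
```

Running the script from the project root prints any component directory that is absent, which is usually a sign of an interrupted download.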

Installation Steps

- Clone the repository:

git clone https://github.com/fudan-generative-vision/hallo3

cd hallo3

- Create and activate conda environment:

conda create -n hallo python=3.10

conda activate hallo

- Install dependencies:

pip install -r requirements.txt

apt-get install ffmpeg

Training Preparation
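Before preparing any data, it may be worth confirming that the environment from the installation steps is usable. The sketch below is an illustration only: `check_environment` is a hypothetical helper, and it checks nothing beyond the Python version used in the conda command and whether `ffmpeg` is on the PATH.

```python
import shutil
import sys


def check_environment(required_python=(3, 10), tools=("ffmpeg",)):
    """Return a dict mapping each check name to True or False."""
    # Compare only major.minor, matching `conda create -n hallo python=3.10`.
    results = {"python": sys.version_info[:2] == tuple(required_python)}
    for tool in tools:
        # shutil.which returns None when the executable is not on PATH.
        results[tool] = shutil.which(tool) is not None
    return results


if __name__ == "__main__":
    for name, ok in check_environment().items():
        print(f"{name}: {'ok' if ok else 'NOT FOUND or version mismatch'}")
```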

Data Preparation

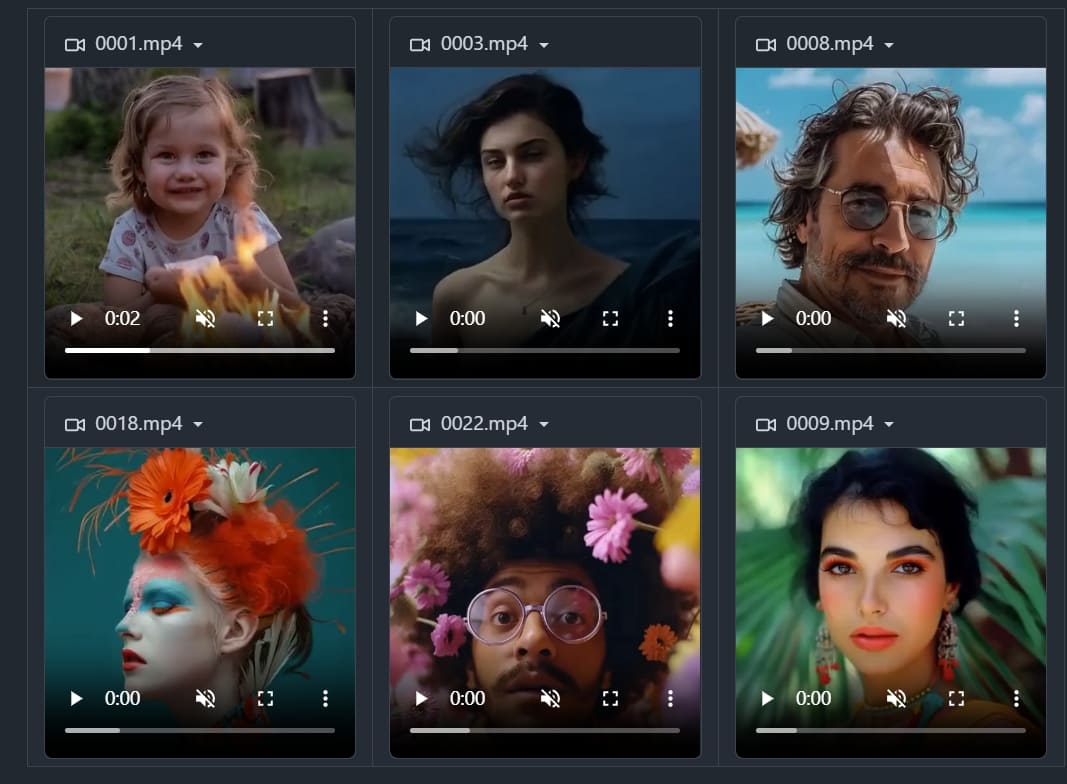

Organize your raw videos in the following directory structure:

dataset_name/
|-- videos/
|   |-- 0001.mp4
|   |-- 0002.mp4
|   `-- 0003.mp4
|-- caption/
|   |-- 0001.txt
|   |-- 0002.txt
|   `-- 0003.txt

Data Preprocessing
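Before running preprocessing, a quick consistency check on the raw dataset can catch missing files early. The sketch below assumes, based on the directory structure shown earlier, that every video in `videos/` should have a caption in `caption/` with the same file stem; `find_unpaired` is a hypothetical helper, not part of the Hallo3 scripts.

```python
from pathlib import Path


def find_unpaired(dataset_dir):
    """Return (videos_without_caption, captions_without_video) as sorted stems."""
    root = Path(dataset_dir)
    video_stems = {p.stem for p in (root / "videos").glob("*.mp4")}
    caption_stems = {p.stem for p in (root / "caption").glob("*.txt")}
    return (
        sorted(video_stems - caption_stems),
        sorted(caption_stems - video_stems),
    )


if __name__ == "__main__":
    no_caption, no_video = find_unpaired("dataset_name")
    print("Videos without a caption:", no_caption)
    print("Captions without a video:", no_video)
```

Two empty lists mean the videos and captions line up one to one.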

Process the videos using the following command:

bash scripts/data_preprocess.sh {dataset_name} {parallelism} {rank} {output_name}

Model Training
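Both training stages read their data list from a JSON file under ./data/ (see `train_data` in the configs below), which appears to be the file preprocessing writes for the chosen {output_name}. As a hedged sketch, the hypothetical helper below only confirms that each listed file exists and parses as JSON; it makes no assumption about the schema inside.

```python
import json
from pathlib import Path


def check_train_data(paths):
    """Map each path to "ok", "missing", or "invalid json"."""
    status = {}
    for raw in paths:
        path = Path(raw)
        if not path.is_file():
            status[raw] = "missing"
            continue
        try:
            with path.open(encoding="utf-8") as fh:
                json.load(fh)
            status[raw] = "ok"
        except ValueError:
            # Covers json.JSONDecodeError and UnicodeDecodeError.
            status[raw] = "invalid json"
    return status


if __name__ == "__main__":
    # Replace with the path(s) listed under train_data in your configs.
    print(check_train_data(["./data/output_name.json"]))
```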

- Update the data path settings in configuration files:

In configs/sft_s1.yaml and configs/sft_s2.yaml:

# sft_s1.yaml
train_data: [
    "./data/output_name.json"
]

# sft_s2.yaml
train_data: [
    "./data/output_name.json"
]

- Start training:

# Stage 1 training

bash scripts/finetune_multi_gpus_s1.sh

# Stage 2 training

bash scripts/finetune_multi_gpus_s2.sh

Inference

Requirements

Input data must meet the following conditions:

- Reference image must be 1:1 or 3:2 aspect ratio

- Driving audio must be in WAV format

- Audio must be in English (the training dataset contains only English)

- Ensure clear vocals in the audio (background music is acceptable)
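The first two requirements above can be checked mechanically before a long inference run. The sketch below is illustrative only and uses just the Python standard library. It assumes the aspect ratio means width divided by height, and `is_wav` relies on the `wave` module, which handles uncompressed PCM files, so a valid but compressed WAV may be reported as unreadable. Image dimensions must be supplied by the caller, for example from an image library of your choice.

```python
import wave

# Accepted ratios from the requirements above. Interpreting them as
# width / height is an assumption.
ALLOWED_RATIOS = (1.0, 3 / 2)


def aspect_ratio_ok(width, height, tolerance=0.01):
    """True if width / height is within `tolerance` of 1:1 or 3:2."""
    ratio = width / height
    return any(abs(ratio - allowed) <= tolerance for allowed in ALLOWED_RATIOS)


def is_wav(path):
    """True if the stdlib wave module can open the file as a WAV."""
    try:
        with wave.open(str(path), "rb"):
            return True
    except (wave.Error, EOFError, OSError):
        return False


if __name__ == "__main__":
    print(aspect_ratio_ok(512, 512))  # square reference image
    print(aspect_ratio_ok(768, 512))  # 3:2 reference image
```

Language and vocal clarity still need a manual check, since neither can be verified from file metadata.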

Running Inference

Execute the following command for inference:

bash scripts/inference_long_batch.sh ./examples/inference/input.txt ./output

Generated animation results will be saved in the ./output directory.
