GLM-4.5 Technical Report and User Experience: A New Benchmark for Chinese Agent-native Foundation Models

On July 28, 2025, Zhipu AI released its new flagship model GLM-4.5, a foundation model built for agent-native applications. The model is open-sourced simultaneously on Hugging Face and ModelScope under the MIT license, making it highly accessible for commercial use. This article combines the GLM-4.5 technical report and real user experience to provide a comprehensive evaluation from the perspectives of technical breakthroughs, performance, real-world applications, and ecosystem development, exploring its significance in the Chinese large model landscape.

Technical Breakthroughs: Native Agent Capabilities and Efficient Architecture

1. Mixture-of-Experts (MoE) Architecture

GLM-4.5 adopts a Mixture-of-Experts (MoE) architecture and comes in two versions:

- GLM-4.5: 355B total parameters, 32B active parameters.

- GLM-4.5-Air: 106B total parameters, 12B active parameters.

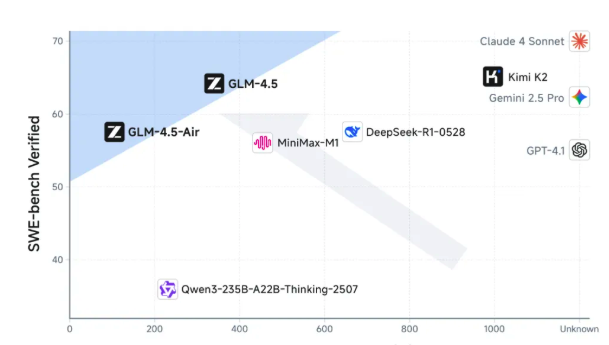

The MoE architecture significantly reduces computational cost through sparse activation while maintaining high performance: only the active parameters (32B of 355B for GLM-4.5) are used for each token. Compared with competitors such as DeepSeek-R1 (about twice the parameters of GLM-4.5) and Kimi-K2 (about three times the parameters), GLM-4.5 achieves higher parameter efficiency on leaderboards such as SWE-Bench Verified, sitting on the Pareto frontier of performance versus parameter count. This efficient design gives it clear advantages in inference speed and cost control, with API prices as low as 0.8 RMB per million input tokens and 2 RMB per million output tokens, well below the prices of mainstream international models from OpenAI and Anthropic. The high-speed version can generate more than 100 tokens/second, meeting the needs of low-latency, high-concurrency scenarios.
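The figures above can be turned into a quick back-of-the-envelope calculation. The sketch below is illustrative only: the class and function names are made up for this example, and the prices are simply the "as low as" figures quoted in this article, which may not match what a given account is actually billed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MoEModel:
    name: str
    total_params_b: float   # total parameters, in billions
    active_params_b: float  # parameters active per token, in billions

    @property
    def active_fraction(self) -> float:
        """Share of the model's parameters used for any single token."""
        return self.active_params_b / self.total_params_b


# Parameter counts as stated in the article.
MODELS = [
    MoEModel("GLM-4.5", 355, 32),
    MoEModel("GLM-4.5-Air", 106, 12),
]

# Prices quoted in the article, in RMB per million tokens.
INPUT_PRICE_RMB_PER_M = 0.8
OUTPUT_PRICE_RMB_PER_M = 2.0


def api_cost_rmb(input_tokens: int, output_tokens: int) -> float:
    """Estimated API cost in RMB for one workload at the quoted prices."""
    return (
        input_tokens / 1_000_000 * INPUT_PRICE_RMB_PER_M
        + output_tokens / 1_000_000 * OUTPUT_PRICE_RMB_PER_M
    )


if __name__ == "__main__":
    for model in MODELS:
        print(f"{model.name}: {model.active_fraction:.1%} of parameters active per token")
    print(f"2M input + 0.5M output tokens: {api_cost_rmb(2_000_000, 500_000):.2f} RMB")
```

Roughly 9% of GLM-4.5's parameters and 11% of GLM-4.5-Air's are active per token, which is where the inference savings of sparse activation come from.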

2. Native Agent Capabilities

Zhipu presents GLM-4.5 as the first model to natively integrate reasoning, coding, and agent capabilities in a single model, positioning it as an “Agent-native Foundation Model”. Its design philosophy deeply integrates general intelligence abilities such as task understanding, planning and decomposition, tool invocation, and execution feedback, going beyond the boundaries of traditional chatbots. The technical report notes that the model is pretrained on 15 trillion tokens of general data, then further trained on 7 trillion tokens of code and reasoning data, and optimized via reinforcement learning for tool use and multi-turn task execution.

The post-training phase employs Supervised Fine-Tuning (SFT), Reinforcement Learning (RL), and expert distillation, significantly enhancing the model’s performance in complex reasoning, code generation, and tool-use scenarios. For example, GLM-4.5 supports structured tool calls (such as outputting <tool_call> data structures) and Chain-of-Thought reasoning, making it especially suitable for developing AI Agent or Copilot applications.
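To make the idea of structured tool calls concrete, here is a minimal sketch of how an agent loop might pull tool calls out of model output and dispatch them. It rests on a loud assumption: that each call is wrapped in `<tool_call>...</tool_call>` and that the body is a JSON object with `name` and `arguments` keys. The article only mentions the tag itself, so the payload layout, the `get_weather` tool, and all function names here are hypothetical.

```python
import json
import re
from typing import Any

# Assumption: the body between the tags is a JSON object. The real wire
# format used by GLM-4.5 may differ; check the official chat template.
TOOL_CALL_RE = re.compile(r"<tool_call>(.*?)</tool_call>", re.DOTALL)


def extract_tool_calls(text: str) -> list[dict[str, Any]]:
    """Return every well-formed tool call found in the model output."""
    calls = []
    for body in TOOL_CALL_RE.findall(text):
        try:
            payload = json.loads(body.strip())
        except json.JSONDecodeError:
            continue  # skip malformed blocks instead of failing the whole turn
        if isinstance(payload, dict) and "name" in payload:
            calls.append(
                {"name": payload["name"], "arguments": payload.get("arguments", {})}
            )
    return calls


def get_weather(city: str) -> str:
    """Hypothetical tool used only for this example."""
    return f"Sunny in {city}"


TOOLS = {"get_weather": get_weather}


def run_tool_calls(text: str) -> list[str]:
    """Execute each extracted call against the local tool registry."""
    results = []
    for call in extract_tool_calls(text):
        tool = TOOLS.get(call["name"])
        if tool is None:
            results.append(f"unknown tool: {call['name']}")
        else:
            results.append(tool(**call["arguments"]))
    return results
```

In a real agent, the strings returned by `run_tool_calls` would be appended to the conversation as tool results so the model can continue the task.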

3. Compatibility and Open Source Strategy

The API of GLM-4.5 is compatible with mainstream code agent frameworks such as Claude Code, Cline, and Roo Code, reducing migration costs for developers. Model weights are open-sourced on Hugging Face and ModelScope under the MIT license, with minimal commercial restrictions, providing flexible secondary development space for enterprises and individual developers. This open strategy not only promotes ecosystem building but also attracts a large number of developers, helping to create a vibrant application ecosystem.

Technical Details and Module Analysis

Pretraining and Data Strategy

GLM-4.5 pretraining is divided into two stages:

- General Pretraining: 15 trillion tokens of general corpus, covering a wide range of text data to ensure general language capability.

- Domain-enhanced Pretraining: Further training on 7 trillion tokens of code and reasoning corpus, optimized for coding and complex reasoning tasks.

After pretraining, the model enters the post-training phase, using medium-scale domain-specific datasets (such as instruction data) for Supervised Fine-Tuning (SFT), enhancing performance on key downstream tasks. This staged training strategy ensures general capability while significantly boosting agent and reasoning specialization.

Reinforcement Learning: Slime Architecture and Agent Optimization

GLM-4.5’s Reinforcement Learning (RL) training is based on Zhipu’s open-source Slime framework, designed for large-scale models and addressing bottlenecks in complex agent tasks. Innovations of Slime include:

- Flexible Hybrid Training Architecture: Supports both synchronous co-located training and asynchronous decoupled training. The asynchronous mode decouples data generation from training, suitable for agent tasks with slow data generation, maximizing GPU utilization.

- Agent-oriented Decoupling: Separates the rollout (data generation) engines from the training engines so they run independently on different hardware, solving long-tail latency issues and accelerating training on long-horizon agent tasks.

- Mixed Precision Acceleration: Data generation uses the efficient FP8 format, while the training loop retains BF16, improving generation speed without sacrificing training quality.
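The asynchronous, decoupled mode described above is essentially a producer-consumer pattern. The sketch below illustrates that pattern with plain Python threads and a queue; it is not slime's actual API, and the function names, the fake latency, and the placeholder reward are all invented for illustration.

```python
import queue
import threading
import time


def rollout_worker(worker_id: int, num_episodes: int, buffer: queue.Queue) -> None:
    """Stand-in for a rollout engine: slow, uneven data generation."""
    for episode in range(num_episodes):
        time.sleep(0.001 * (worker_id + 1))  # simulate variable rollout latency
        buffer.put({"worker": worker_id, "episode": episode, "reward": 1.0})


def trainer(buffer: queue.Queue, batch_size: int, total_samples: int) -> list:
    """Stand-in for the training engine: consumes samples as they arrive."""
    batches, batch = [], []
    for _ in range(total_samples):
        batch.append(buffer.get())  # blocks only until *some* worker has data
        if len(batch) == batch_size:
            batches.append(batch)  # a real trainer would run an optimizer step here
            batch = []
    if batch:
        batches.append(batch)
    return batches


def run_async_training(num_workers: int = 3, episodes_per_worker: int = 4,
                       batch_size: int = 4) -> list:
    """Run rollout workers and the trainer concurrently over a shared buffer."""
    buffer: queue.Queue = queue.Queue(maxsize=16)
    workers = [
        threading.Thread(target=rollout_worker, args=(i, episodes_per_worker, buffer))
        for i in range(num_workers)
    ]
    for worker in workers:
        worker.start()
    batches = trainer(buffer, batch_size, num_workers * episodes_per_worker)
    for worker in workers:
        worker.join()
    return batches


if __name__ == "__main__":
    for i, batch in enumerate(run_async_training()):
        print(f"batch {i}: from workers {[s['worker'] for s in batch]}")
```

Because the trainer never waits for one specific worker, a slow rollout delays only its own samples rather than stalling the whole step, which is the point of decoupling generation from training.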

The post-training phase optimizes agent capabilities through supervised fine-tuning and reinforcement learning, merging the general abilities of GLM-4-0414 and the reasoning abilities of GLM-Z1, with a focus on agent coding, deep search, and tool use. The training process includes:

- Reasoning Optimization: Difficulty-based curriculum learning, single-stage RL over a 64K context window (which worked better than progressive scheduling), dynamic sampling temperature, and adaptive clipping for stable policy updates on STEM problems.

- Agent Task Training: Training focuses on information-seeking QA and software engineering tasks. Synthetic search QA data is generated via human-in-the-loop extraction and obfuscation of web content, while coding tasks are driven by feedback from real software engineering (SWE) tasks.
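As a rough illustration of difficulty-based curriculum learning, the sketch below splits a problem pool into a moderate stage and a hard stage using each problem's measured pass rate. The two-stage split, the 0.5 cutoff, and the rule of dropping problems the model always or never solves are assumptions made for this example, not details taken from the technical report.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Problem:
    prompt: str
    pass_rate: float  # fraction of sampled answers that were correct (0.0 to 1.0)


def curriculum_stages(problems: list, easy_cutoff: float = 0.5) -> list:
    """Split problems into [moderate, hard] stages by pass rate.

    Assumption for this sketch: problems that are always solved (1.0) or
    never solved (0.0) are dropped, since every sampled answer receives
    the same reward and the comparison between samples is uninformative.
    """
    useful = [p for p in problems if 0.0 < p.pass_rate < 1.0]
    moderate = [p for p in useful if p.pass_rate >= easy_cutoff]
    hard = [p for p in useful if p.pass_rate < easy_cutoff]
    # Within each stage, present easier problems first.
    moderate.sort(key=lambda p: p.pass_rate, reverse=True)
    hard.sort(key=lambda p: p.pass_rate, reverse=True)
    return [moderate, hard]


if __name__ == "__main__":
    pool = [
        Problem("a", 1.0), Problem("b", 0.8), Problem("c", 0.6),
        Problem("d", 0.2), Problem("e", 0.0), Problem("f", 0.4),
    ]
    for stage_index, stage in enumerate(curriculum_stages(pool)):
        print(f"stage {stage_index}: {[p.prompt for p in stage]}")
```

A training run would then sample from the moderate stage first and switch to the hard stage once progress on the first stage levels off.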

Performance: Benchmarks and Real-world Scenarios

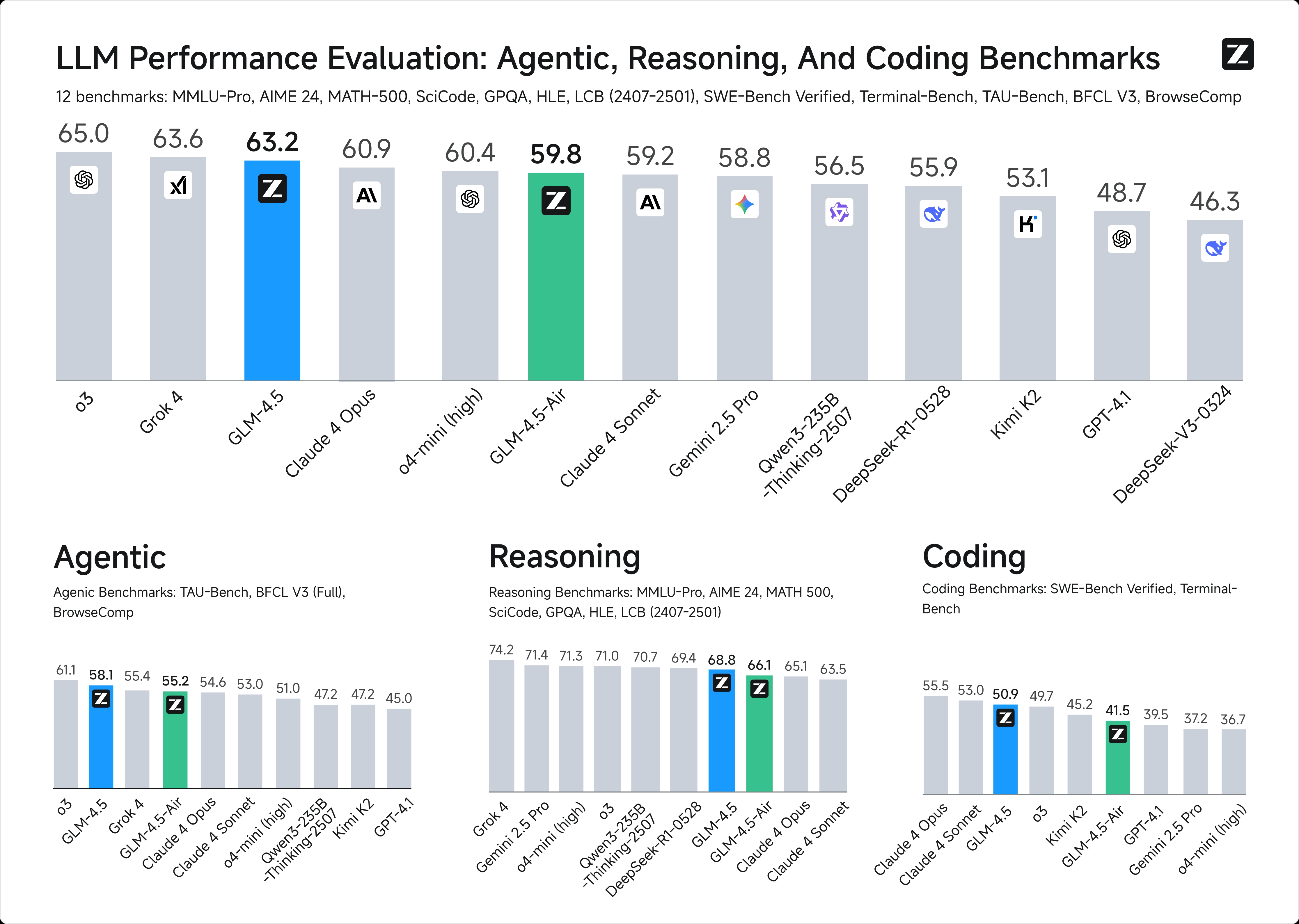

1. Benchmarks: Global Top 3, China No.1

GLM-4.5 ranks third globally, first in China, and first among open-source models in 12 authoritative benchmarks, including MMLU Pro, AIME 24, MATH 500, SciCode, GPQA, HLE, and LiveCodeBench. Especially in reasoning evaluations (such as MATH, GSM8K, BBH), the model demonstrates strong multi-step logical reasoning and mathematical problem-solving abilities.

In coding, GLM-4.5 performs excellently on the SWE-Bench Verified leaderboard, outperforming larger models like DeepSeek-R1 and Kimi-K2. The Zhipu team also organized 52 real-world task evaluations across six development directions, comparing with mainstream models like Claude-4-Sonnet, Kimi-K2, and Qwen3-Coder. Results show GLM-4.5 leads other open-source models in multi-turn interaction, tool-use reliability, and task completion, though there is still a gap with Claude-4-Sonnet.

2. Real-world Performance

User tests show that GLM-4.5 excels in real applications, especially in complex code generation, web development, and creative content generation. For example, users can generate interactive search engines or Bilibili-style web demos with simple prompts like “build a Google search website” or “develop a Bilibili-style web demo”. These cases benefit from the model’s native support for frontend web design, backend database management, and tool-call interfaces.

In creative writing tests (such as the novel “The Last Order of the Scent Blender”), GLM-4.5 performs well in world-building, atmosphere, and plot development. Compared to models like Claude and Gemini, it offers comparable fluency and instruction-following. Users report that its outputs “hardly look like they were written by AI”, especially in visual design and interactive experience.

In mathematical reasoning, GLM-4.5 is rigorous on standardized academic problems (such as college entrance exam math questions), often outputting correct answers directly. When “thinking mode” is enabled, its accuracy in complex reasoning tasks improves further, showing strong logical reasoning ability.

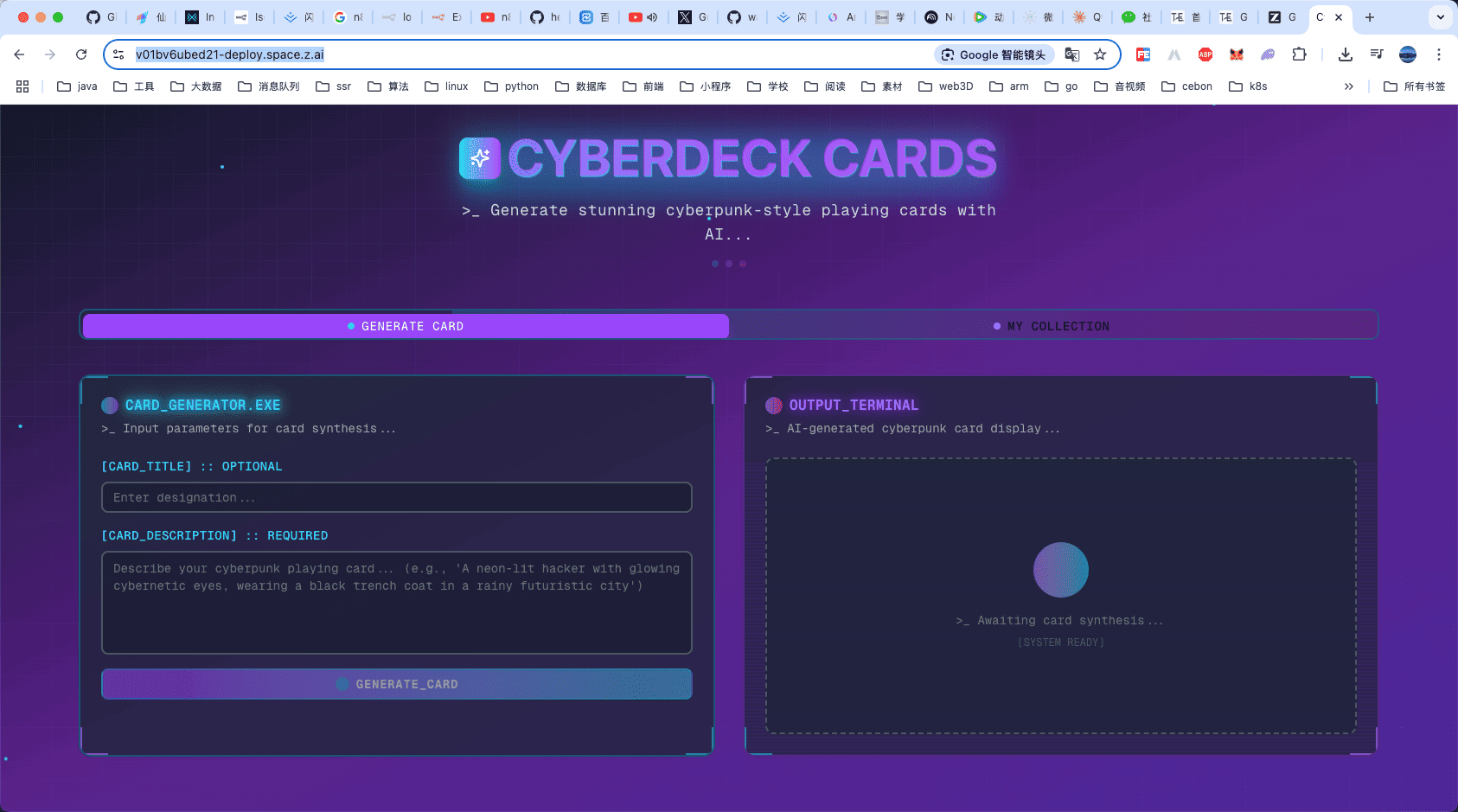

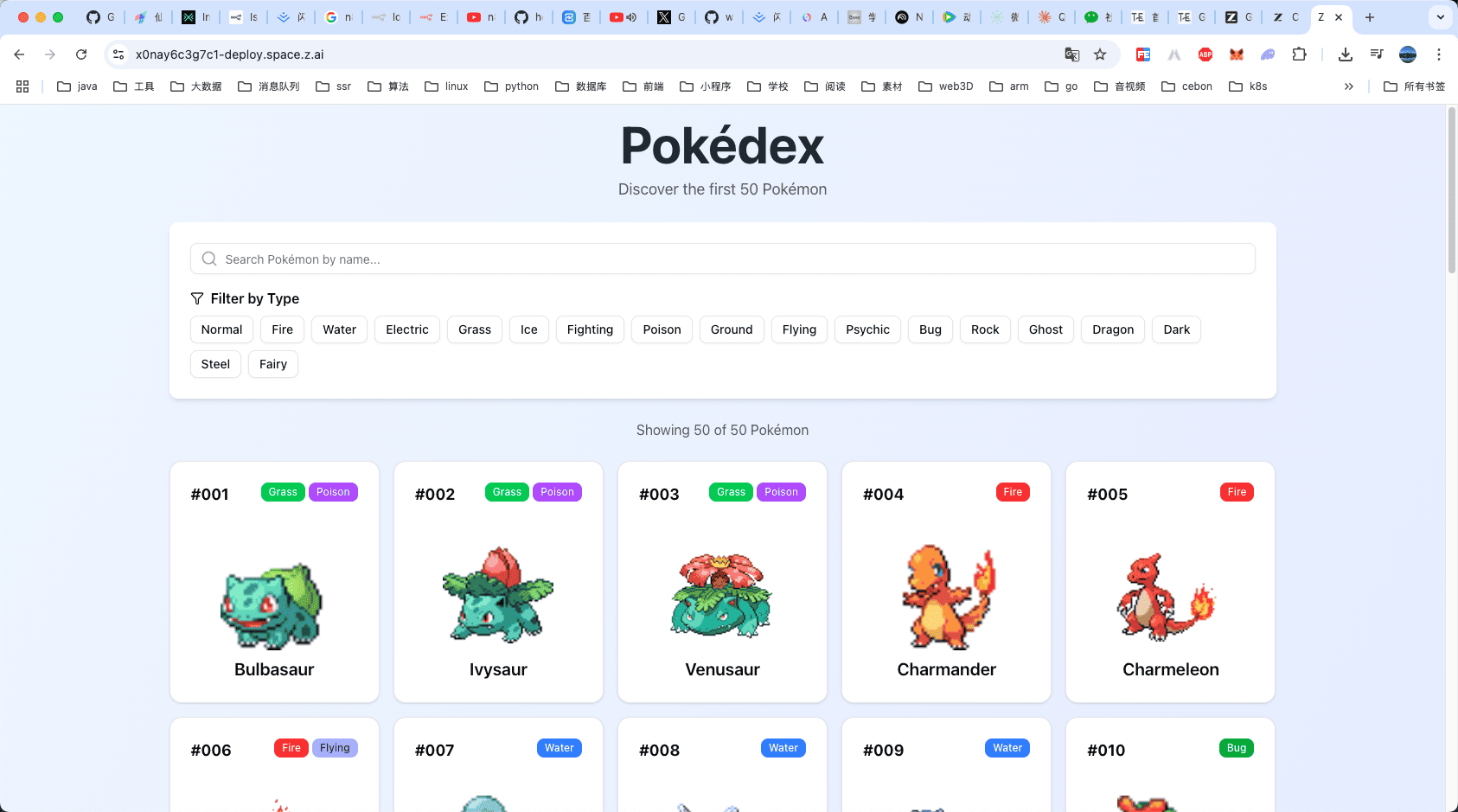

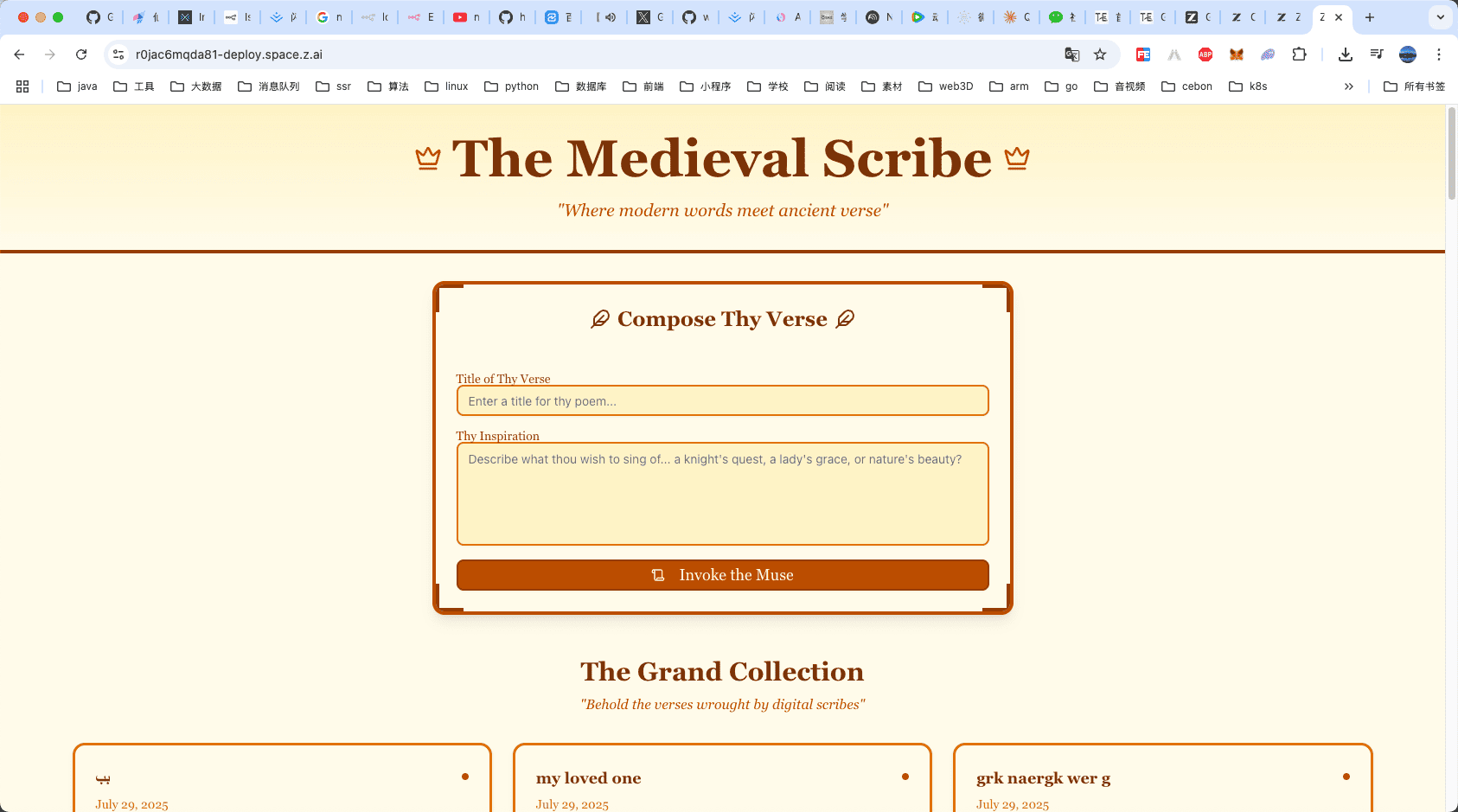

Here are some official examples:

- 🧩 Cyberpunk Card AI Generator

- 🌟 Pokémon Pokédex Live

- 📚 Medieval Poetry Gen

User Experience: High Cost-effectiveness and Usability

1. Cost and Efficiency

The low cost and high efficiency of GLM-4.5 are core highlights for users. API pricing is much lower than international competitors, and the high-speed version generates over 100 tokens/second, suitable for low-latency scenarios. Users report smooth online experiences with GLM-4.5 on platforms like Z.ai or Zhipu Qingyan, supporting chat, artifact generation, and full-stack development. For local deployment, the official GitHub provides detailed tutorials for vLLM and SGLang frameworks, lowering technical barriers.

2. Ecosystem and Developer Support

GLM-4.5 attracts many developers through open source and compatibility. Users can call the API on Z.ai or download model weights from Hugging Face for local deployment. In practice, developers use the API in IDEs like Cursor and Trae, with simple configuration and stable operation. Zhipu has also released datasets and agent trajectories for 52 test tasks, facilitating industry validation and reproducibility, further enhancing model transparency and credibility.

Users jokingly call GLM-4.5 the “Gemini Little Model” because, in tasks like text rewriting, interpretation, and translation, it feels roughly 50-60% as comfortable to use as Gemini, and it is more stable than some competitors (such as DeepSeek). Developers especially appreciate its performance in Chinese tasks and multi-turn reasoning, considering it one of the most promising open-source models for Chinese agent applications.

Significance for Chinese Large Models

The release of GLM-4.5 marks a major breakthrough for Chinese large models in the agent field. Its efficient MoE architecture and native agent capabilities fill the gap in handling complex tasks. Compared to international leaders like Claude-4-Sonnet and GPT-4-Turbo, GLM-4.5 shows significant advantages in Chinese tasks, multi-turn reasoning, and tool use, while lowering enterprise deployment thresholds with low cost and high compatibility.

The open-source strategy further promotes the prosperity of the Chinese AI ecosystem. The adoption of the MIT license and openness on Hugging Face make GLM-4.5 a cornerstone for developer innovation. Zhipu provides a complete API, online experience platform, and detailed deployment tutorials, building a closed-loop ecosystem from R&D to real-world application, offering affordable and effective AI infrastructure for SMEs and individual developers.

Limitations and Outlook

Despite its outstanding performance among open-source models, GLM-4.5 still lags behind top closed-source models (like Claude-4-Sonnet) in some areas, especially in complex multimodal tasks and ultra-long context handling. User feedback notes that the model has yet to exceed expectations in some creative tasks (such as deep character development) and needs further optimization. Although it supports 26 languages, performance in non-Chinese/English scenarios can still be improved.

Looking ahead, Zhipu plans to continue optimizing agent capabilities and to strengthen multimodal and long-running task execution. The open-sourcing of GLM-4.5 and the ecosystem around it lay the foundation for the widespread application of Chinese AI, and are expected to inspire more innovative scenarios and promote AI as a universal productivity tool.
